XR Terms You Need To Know

Many readers will be new to some of the technologies and concepts referenced in this document, so let’s start with a short explanation of the key terms:

VR + AR + MR = XR (One Acronym to Rule Them All)

The catch-all term ‘eXtended Reality’ (XR) encompasses Augmented Reality (AR), Virtual Reality (VR), Mixed Reality (MR), and the associated immersive technologies that power them. We define some of these terms below.

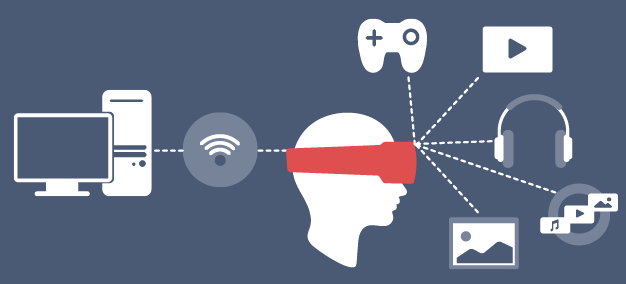

Virtual Reality (VR)

Virtual Reality is an artificial digital environment that completely replaces the real world. When you put on a VR headset, everything you see has been generated by a computer. However, the concept is becoming increasingly complex and nuanced as the boundaries between Virtual and Augmented Reality blur, and the two modes merge at various points of the “immersive spectrum.” Some VR headsets – such as the Oculus Quest – now have external cameras that capture the user’s real-world surroundings and show them inside the headset (to stop users from colliding with walls or furniture, for instance). This “pass-through” functionality lets you combine physical and digital elements into blended environments, sometimes referred to as “Mixed Reality” (more on this later). For the purpose of providing a definition, however, we will refer to VR devices as those that primarily display artificial digital environments.

360-Degree Video

360-degree videos are a common type of immersive media – widely available on platforms such as YouTube – made by “stitching together” captures of the real world. These captures are generated by special camera rigs with multiple lenses that simultaneously record everything around the camera position. For the purposes of this publication, we consider 360-degree videos to be different from VR environments.

Augmented Reality (AR)

Augmented Reality (AR) is the overlay of digital content on top of your current view of the real-world environment surrounding you. This can be experienced through either wearable “smart glasses” or mobile devices such as smartphones and tablets. Many companies are actively developing AR wearables, but it remains a significant challenge to fit the necessary display resolution and processing power into a device that is comfortable, affordable, and not embarrassing to wear in public. The most pervasive AR applications, therefore, tend to be designed for handheld devices, which bridge the gap until smart glasses technology matures enough to trigger widespread consumer adoption.

That being said, when you are alone at home using head-worn AR devices, no one is going to care what the device looks like – or even that you are in your pajamas. New devices are arriving – Nreal Light, for example – that are affordable, functional, and stylish. We had expected a significant number of these devices to reach the market in Q1/Q2 2020, but because of supply chain disruptions from factory shutdowns caused by the COVID-19 pandemic, we now expect these products to be available in Q3/Q4 2020 instead.

Mixed Reality (MR)

Mixed Reality (MR) enables you to interact with and manipulate both physical and virtual items and environments, using next-generation sensing and imaging technologies. Mixed Reality allows you to see and immerse yourself in the world around you, even as you interact with a virtual environment using your own hands – all without ever removing your headset. It lets you have one foot (or hand) in the real world and the other in an imaginary place, breaking down the basic distinction between real and imaginary and offering an experience that can change the way you communicate.

For the purposes of this publication, the term Augmented Reality (AR) will also encompass Mixed Reality (MR).

Head-Mounted Display (HMD)

A head-mounted display (HMD) is a display device, worn on the head or as part of a helmet, that has a small display optic in front of one eye (monocular HMD) or each eye (binocular HMD). A binocular HMD has the potential to display a different image to each eye. This can be used to show stereoscopic images – two offset images displayed separately to the left and right eye. These two-dimensional images are then combined in the brain to give the perception of 3D depth. Monocular HMDs are not recommended for XR collaboration purposes.
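To make the idea of two offset images concrete, here is a minimal Python sketch. It assumes a simple pinhole projection and an eye separation of 63 mm (an illustrative value, not one specified in this document), and the function names are hypothetical. It shows that the horizontal offset between the left-eye and right-eye images (the disparity) shrinks as an object moves farther away, which is the cue the brain uses to perceive depth.

```python
# Illustrative sketch only: a pinhole-camera model of a binocular HMD.
# ASSUMPTIONS: eye separation of 0.063 m and a focal length of 1.0 are
# example values chosen for this sketch.

def project_x(point_x, point_z, eye_x, focal_length=1.0):
    """Horizontal image coordinate of a point, seen from an eye at eye_x.

    point_z is the distance in front of the viewer and must be positive.
    """
    return focal_length * (point_x - eye_x) / point_z


def stereo_disparity(point_x, point_z, eye_separation=0.063):
    """Difference between the left-eye and right-eye image positions."""
    half = eye_separation / 2
    left = project_x(point_x, point_z, eye_x=-half)
    right = project_x(point_x, point_z, eye_x=+half)
    return left - right


if __name__ == "__main__":
    for distance in (0.5, 1.0, 2.0, 4.0):
        print(distance, stereo_disparity(0.0, distance))
```

A monocular HMD renders only one of these two views, so no disparity is available as a depth cue.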

HMDs may be used to view a see-through image superimposed on the real-world view, creating what is called Augmented Reality. Most HMDs are mounted in a helmet (VR HMD) or a set of goggles (AR HMD). Although there are innovations to enable access to Augmented Reality experiences using a form of contact lens, these are still years away from commercial reality. Please see the “XR Device Hardware” section for more details.

XR Collaboration

XR collaboration refers to the use of XR technologies to bring groups of people together for remote activities, such as meetings, conferences, design reviews, classroom sessions, and more. Using XR technologies, individuals and organizations now have the ability to communicate in a much more visceral and connected way, engendering a greater sense of physical presence through the use of head-worn devices.

Note: Handheld device and computer users also have the ability to join these events, but with far less immersion. In particular, you have to do a lot more work to control your “camera” position or your rendered point of view. This makes interacting more of an effort.

Degrees of Freedom

Previously in XR, devices tracked only head rotation: users could look up and down, turn left and right, or tilt their head, rotating about the X, Y, and Z axes. This yields three degrees of freedom (3DoF). It was adequate for stationary 360-degree video experiences, but underwhelming when it came to providing full immersion. Unlocking movement along the X/Y/Z axes means that, in addition to looking in any direction, you can also move up/down, left/right, and forward/back, giving you six degrees of freedom (6DoF). This enables a much more immersive user experience, since there is a real-time connection between physical movement and visual perception. It also helps reduce “simulator sickness,” which can arise from virtual experiences.
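The difference between the two tracking modes can be sketched as a data structure. The following Python example is illustrative only: the class and function names are hypothetical, and the axis convention (pitch about X, yaw about Y, roll about Z, forward along +Z) is an assumption, since conventions vary between platforms. A 3DoF pose stores three rotation values, while a 6DoF pose adds three position values.

```python
from dataclasses import dataclass, fields, replace


@dataclass
class Pose3DoF:
    """Orientation only: rotation about each axis, in degrees."""
    pitch: float = 0.0  # look up/down (assumed: rotation about X)
    yaw: float = 0.0    # look left/right (assumed: rotation about Y)
    roll: float = 0.0   # tilt head sideways (assumed: rotation about Z)


@dataclass
class Pose6DoF(Pose3DoF):
    """Orientation plus position: translation along each axis, in metres."""
    x: float = 0.0  # left/right
    y: float = 0.0  # up/down
    z: float = 0.0  # forward/back (assumed: +Z is forward)


def degrees_of_freedom(pose):
    """Count the independent values the pose tracks."""
    return len(fields(pose))


def step_forward(pose, metres):
    """Return the pose after the user physically steps forward."""
    if isinstance(pose, Pose6DoF):
        return replace(pose, z=pose.z + metres)
    # A 3DoF device has no position to update, so the step goes unnoticed.
    return pose
```

With these definitions, `step_forward` changes a `Pose6DoF` but returns a `Pose3DoF` unchanged, which mirrors why 3DoF experiences feel fixed in place when you physically move.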

SLAM

Simultaneous Localization And Mapping (SLAM) is a technology that enables six degrees of freedom by positioning you relative to your environment. Where 3DoF allows you only to pitch, yaw, and roll your head, SLAM adds up/down, left/right, and back/forth movement. These additional degrees of freedom let you move around while virtual objects stay fixed in place, so you can observe them from different perspectives. Motion sensors, mounted on the headset or externally, detect your movement, and their readings are combined with data from cameras or other sensors to locate you in an unknown environment. Many AR HMDs run SLAM algorithms onboard, but there are also solutions where SLAM is performed on the network (e.g., edge computing).
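The sketch below does not implement SLAM itself. It only illustrates, under simplifying assumptions, how a head pose estimate (such as one a SLAM system might supply) is used to keep a virtual object fixed in the world. It works on a flat, top-down floor plan, measures the heading counterclockwise from the +x axis, and uses hypothetical function and parameter names.

```python
import math


def world_to_head(point, head_position, heading_deg):
    """Express a world-anchored point relative to the viewer.

    point, head_position: (x, y) floor coordinates in metres.
    heading_deg: direction the head faces, counterclockwise from +x.
    Returns (forward, left): metres ahead of and to the left of the viewer.
    """
    dx = point[0] - head_position[0]
    dy = point[1] - head_position[1]
    theta = math.radians(heading_deg)
    # Project the offset onto the head's forward and left unit vectors.
    forward = dx * math.cos(theta) + dy * math.sin(theta)
    left = -dx * math.sin(theta) + dy * math.cos(theta)
    return forward, left


if __name__ == "__main__":
    cube = (2.0, 0.0)  # a virtual object anchored in the world
    # The cube never moves; only the estimated head pose changes.
    print(world_to_head(cube, (0.0, 0.0), 0.0))
    print(world_to_head(cube, (2.0, -1.0), 90.0))
```

Because the cube's world coordinates never change, walking around it changes only its position relative to the viewer, which is what lets you inspect a virtual object from different sides.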